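I won't go into background here; you can search online for material on the protocols, implementation, and features. This post walks through setting up Helix.

Helix is a RealNetworks product. Official site: www.realnetworks.com

Download Helix: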

http://helixproducts.real.com/hmdp/software/helixserver/151/mbrs-151-GA-linux-rhel6-64.zip

http://helixproducts.real.com/hmdp/software/helixserver/151/mbrs-151-GA-linux-rhel5-64.zip

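These are the latest versions on the official site at the time of writing. Install the rhel6 build on Red Hat 6 or CentOS 6, and the rhel5 build on Red Hat 5 or CentOS 5.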
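
The choice between the two archives can be scripted. The following is a minimal sketch, not part of the original setup: `helix_download_url` is a hypothetical helper name, and it only knows the two URLs listed above.

```shell
#!/usr/bin/env bash
# Sketch: pick the Helix 15.1 archive for a given RHEL/CentOS major version.
# helix_download_url is a hypothetical helper; the URLs are the two from this post.
helix_download_url() {
  local major="$1"
  local base="http://helixproducts.real.com/hmdp/software/helixserver/151"
  case "$major" in
    5|6) echo "${base}/mbrs-151-GA-linux-rhel${major}-64.zip" ;;
    *)   echo "unsupported major version: ${major}" >&2; return 1 ;;
  esac
}

# Example usage (not run here; assumes wget and unzip are installed):
#   url=$(helix_download_url 6)
#   wget "$url" && unzip "$(basename "$url")"
```

Returning a non-zero status for any other version keeps the script from silently downloading a build that does not match the system.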

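The software is commercial, with a 30-day evaluation. After you submit your details on the official site, the license information is sent to your email address; download it from there.

System environment: CentOS 6.5 (64-bit), iptables disabled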

[root@localhost ~]# chmod 755 servinst_mobile_linux-rhel6-x86_64.bin //add execute permission

[root@localhost ~]# ./servinst_mobile_linux-rhel6-x86_64.bin //start the installation

Extracting files for Helix installation……

Welcome to the Helix Universal Media Server (RealNetworks) (15.1.0.393) Setup for UNIX

Setup will help you get Helix Universal Media Server running on your computer.

Press [Enter] to continue… //press Enter to confirm

If a Helix Universal Media Server license key file has been sent to you,

please enter its directory path below. If you have not

received a Helix Universal Media Server license key file, then this server

WILL NOT OPERATE until a license key file is placed in

the server’s License directory. Please obtain a free

Basic Helix Universal Media Server license or purchase a commercial license

from our website at http://www.realnetworks.com/helix/. If you need

further assistance, please visit our on-line support area

at http://www.realnetworks.com/helix/streaming-media-support/.

MachineID: e273-990d-c0b5-9a5f-8be8-abaf-f129-6476

License Key File: []: /root/RNKey-Helix_Universal_Server_10-Stream-nullnull-39545068825149354.lic

Enter the path to the .lic license file here. It must be the full path!

Installation and use of Helix Universal Media Server requires

acceptance of the following terms and conditions:

Press [Enter] to display the license text… //displays the license agreement

Choose “Accept” to accept the terms of this

license agreement and continue with Helix Universal Media Server setup.

If you do not accept these terms, enter “No”

and installation of Helix Universal Media Server will be cancelled.

I accept the above license: [Accept]:

Press Enter again to accept and continue.

Enter the complete path to the directory where you want

Helix Universal Media Server to be installed. You must specify the full

pathname of the directory and have write privileges to

the chosen directory.

Directory: [/root]:/usr/local/helix //install path; here I install to /usr/local/helix

Please enter a username and password that you will use

to access the web-based Helix Universal Media Server Administrator and monitor.

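Username []: //the user for managing Helix through the web interface; the next prompt sets the password. Here both the username and the password are admin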

Please enter SSL/TLS configuration information.

Country Name (2 letter code) [US]: CN //two-letter country code

State or Province Name (full name) [My State]: China //state or province

Locality Name (e.g., city) [My Locality]: QingDao

Organization Name (e.g., company) [My Company]: Rootop

Organizational Unit Name (e.g., section) [My Department]: IT

Common Name (e.g., hostname) [My Name]: venus

Email Address [myname@mailhost]: venus@rootop.org

Certificate Request Optional Name []: certificate //I made this value up; I was not sure what to enter here

Helix Universal Media Server will listen for Administrator requests on the

port shown. This port has been initialized to a random value

for security. Please verify now that this pre-assigned port

will not interfere with ports already in use on your system;

you can change it if necessary. These connections have URLs

that begin with “http://”

Port [15112]: //press Enter to accept the default; the port is initialized to a random value and can be set manually. (http)

Helix Universal Media Server will also listen for HTTPS Administrator

requests on the port shown. This port has been initialized to

a random value for security. Please verify now that this

pre-assigned port will not interfere with ports already in

use on your system; you can change it if necessary. These

connections have URLs that begin with “https://”

Port [25780]: //port for HTTPS (encrypted) connections

You have selected the following Helix Universal Media Server configuration:

Install Location: /usr/local/helix

Encoder User/Password: admin/****

Monitor Password: ****

Admin User/Password: admin/****

Admin Port: 15112

Secure Admin Port: 25780

RTSP Port: 554

RTMP Port: 1935

HTTP Port: 80

HTTPS Port: 443

RTSP Fast Channel Switching API Port: 8008

Server Side Playlist API Port: 8009

Content Mgmt Port: 8010

Control Port Security: Disabled

Enter [F]inish to begin copying files, or [P]revious to

revise the above settings: [F]: F //enter F to confirm the settings and start the installation

Generating SSL/TLS Key file…

Running: ‘OPENSSL_CONF=openssl.cnf Bin/openssl genrsa -out Certificates/key.pem 2048’

Generating RSA private key, 2048 bit long modulus

………..+++

……………….+++

e is 65537 (0x10001)

Generating SSL/TLS Cert file…

Running: ‘OPENSSL_CONF=openssl.cnf Bin/openssl req -new -x509 -key Certificates/key.pem -out Certificates/cert.pem -days 1000 -batch’

Generating SSL/TLS CSR file…

Running: ‘OPENSSL_CONF=openssl.cnf Bin/openssl req -new -key Certificates/key.pem -out Certificates/key.csr -batch’

Copying Helix Universal Media Server files…..

Helix Universal Media Server installation is complete.

RealNetworks recommends increasing the default file descriptor

limits prior to using your Helix Universal Media Server or Proxy. Please

refer to the Installation Chapter of the Helix Systems Integration

Guide for more information on setting File Descriptor limits,

and recommended settings for your system.

If at any time you should require technical

assistance, please visit our on-line support area

at http://www.realnetworks.com/helix/streaming-media-support/.

Cleaning up installation files…

Done.

[root@localhost ~]#

Installation complete.
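
The installer output recommends raising the file descriptor limit before running the server. A minimal sketch for checking the current limit follows; `fd_limit_ok` is a hypothetical helper, and the threshold 4096 is only a placeholder, not a value from RealNetworks. The Helix Systems Integration Guide mentioned by the installer is the place to find the recommended setting.

```shell
#!/usr/bin/env bash
# fd_limit_ok MIN: succeed if the soft open-file limit is at least MIN.
# Hypothetical helper for illustration only.
fd_limit_ok() {
  local min="$1" cur
  cur=$(ulimit -n)
  [ "$cur" = "unlimited" ] && return 0
  [ "$cur" -ge "$min" ]
}

# 4096 is a placeholder threshold, not a RealNetworks recommendation.
if fd_limit_ok 4096; then
  echo "open-file limit OK: $(ulimit -n)"
else
  echo "open-file limit is $(ulimit -n); consider raising it, e.g. 'ulimit -n 4096'"
fi
```

Note that `ulimit -n` only affects the current shell and its children, so it would have to be run in the same shell that later starts the server.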

Start the service:

[root@localhost ~]# /usr/local/helix/Bin/rmserver /usr/local/helix/rmserver.cfg &

rmserver.cfg is the Helix configuration file; it contains the port settings, among other things.
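
To locate the port settings quickly, a plain text search is usually enough. This sketch assumes nothing about the syntax of rmserver.cfg beyond the word "port" appearing on the relevant lines; `show_port_lines` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# show_port_lines FILE: print every line of FILE that mentions "port",
# case-insensitively, prefixed with its line number.
# Makes no assumption about the config syntax beyond that keyword.
show_port_lines() {
  grep -n -i 'port' "$1"
}

# Example usage with the install path from this post (not run here):
#   show_port_lines /usr/local/helix/rmserver.cfg
```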

Starting TID 140478784730848, procnum 3 (rmplug)

Loading Helix Server License Files…

Starting TID 140478621152992, procnum 4 (rmplug)

Starting TID 140478613812960, procnum 5 (rmplug)

Starting TID 140478606472928, procnum 6 (rmplug)

Starting TID 140478599132896, procnum 7 (rmplug)

Starting TID 140478591792864, procnum 8 (rmplug)

Starting TID 140478241568480, procnum 9 (rmplug)

Starting TID 140478584452832, procnum 10 (rmplug)

Starting TID 140478234228448, procnum 11 (rmplug)

Starting TID 140478226888416, procnum 12 (rmplug)

Starting TID 140478219548384, procnum 13 (rmplug)

Starting TID 140478212208352, procnum 14 (rmplug)

Starting TID 140478204868320, procnum 15 (rmplug)

Starting TID 140478197528288, procnum 16 (rmplug)

Starting TID 140478190188256, procnum 17 (rmplug)

Starting TID 140478182848224, procnum 18 (rmplug)

Starting TID 140478180751072, procnum 19 (rmplug)

Starting TID 140477631297248, procnum 20 (rmplug)

Starting TID 140477623957216, procnum 21 (rmplug)

Starting TID 140477616617184, procnum 22 (rmplug)

Starting TID 140477609277152, procnum 23 (rmplug)

Starting TID 140477601937120, procnum 24 (rmplug)

Starting TID 140477594597088, procnum 25 (rmplug)

Starting TID 140477587257056, procnum 26 (rmplug)

Starting TID 140477579917024, procnum 27 (rmplug)

Starting TID 140477572576992, procnum 28 (rmplug)

Starting TID 140477565236960, procnum 29 (memreap)

Starting TID 140477557896928, procnum 30 (streamer)

Starting TID 140477550556896, procnum 31 (streamer)

Server has started 2 Streamers…

Version: Helix Universal Media Server (RealNetworks) (15.1.0.393) (Build 10096/377)

Output like this means the service started successfully.

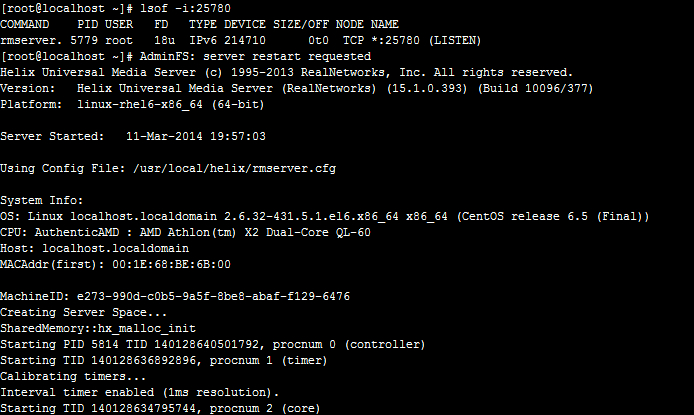

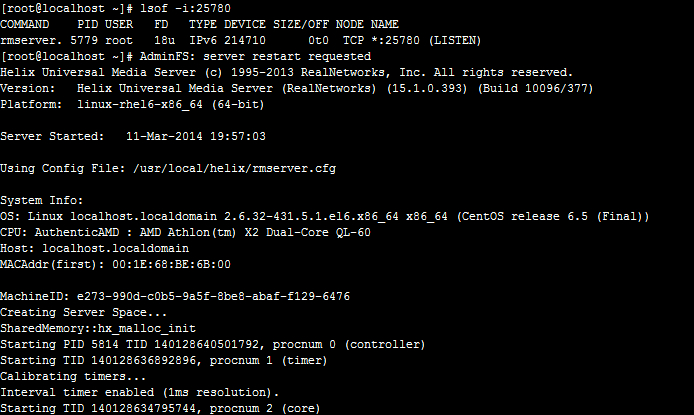

Check that the configured ports are listening:

[root@localhost ~]# lsof -i:15112

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

rmserver. 5779 root 17u IPv6 214708 0t0 TCP *:15112 (LISTEN)

[root@localhost ~]# lsof -i:25780

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

rmserver. 5779 root 18u IPv6 214710 0t0 TCP *:25780 (LISTEN)
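
Rather than querying one port at a time, the lsof output can be filtered down to just the listening port numbers. A minimal sketch, assuming the column layout shown above; `listening_ports` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# listening_ports: read lsof-style lines on stdin and print the port number
# of every socket in the LISTEN state. Assumes the NAME column looks like
# "*:15112 (LISTEN)", as in the output above.
listening_ports() {
  awk '$NF == "(LISTEN)" { n = split($(NF-1), parts, ":"); print parts[n] }'
}

# Example usage on a live system (not run here):
#   lsof -nP -iTCP -sTCP:LISTEN | grep rmserver | listening_ports | sort -un
```

The `-n` and `-P` options stop lsof from translating addresses and port numbers into names, which keeps the output numeric and easy to compare with the installer summary.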

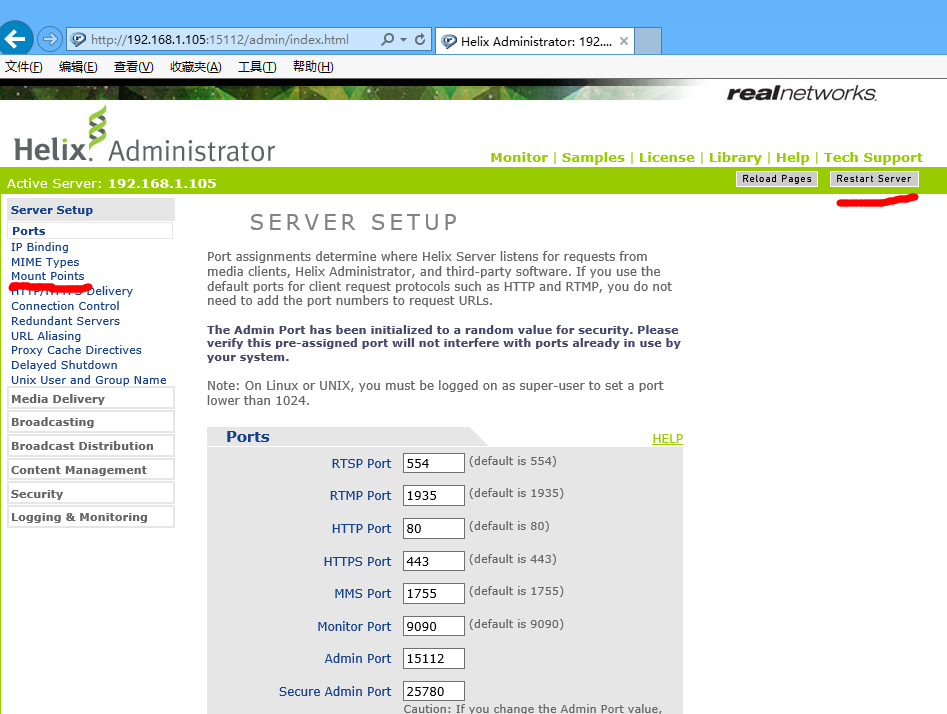

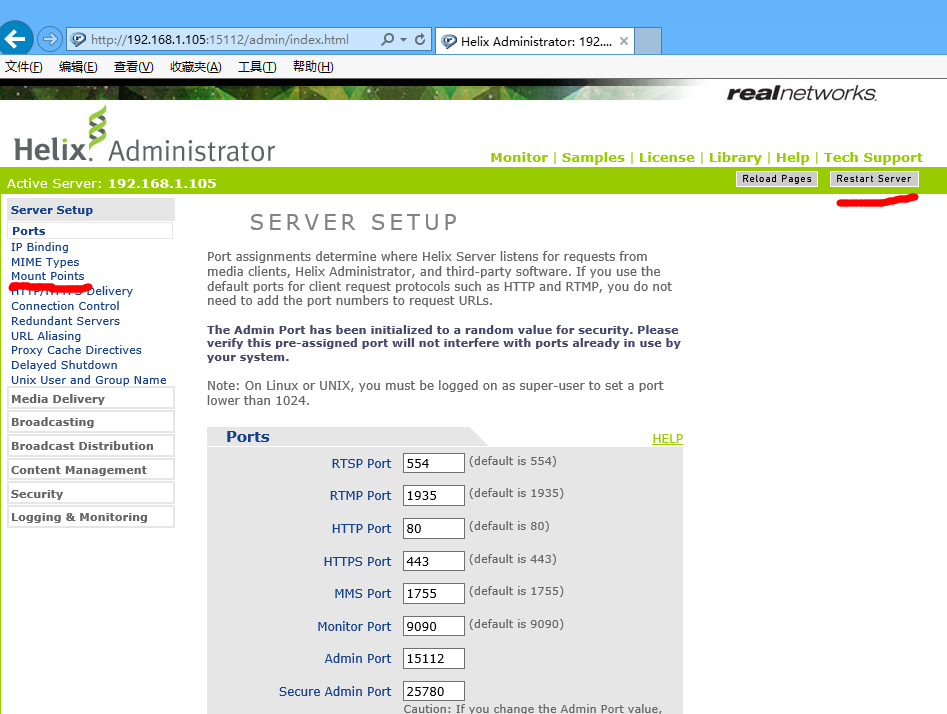

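Now log in to the admin interface and create a video library:

Plain HTTP connection:

http://192.168.1.105:15112/admin/index.html

Username: admin

Password: admin

HTTPS connection:

https://192.168.1.105:25780/admin/index.html

Username: admin

Password: admin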

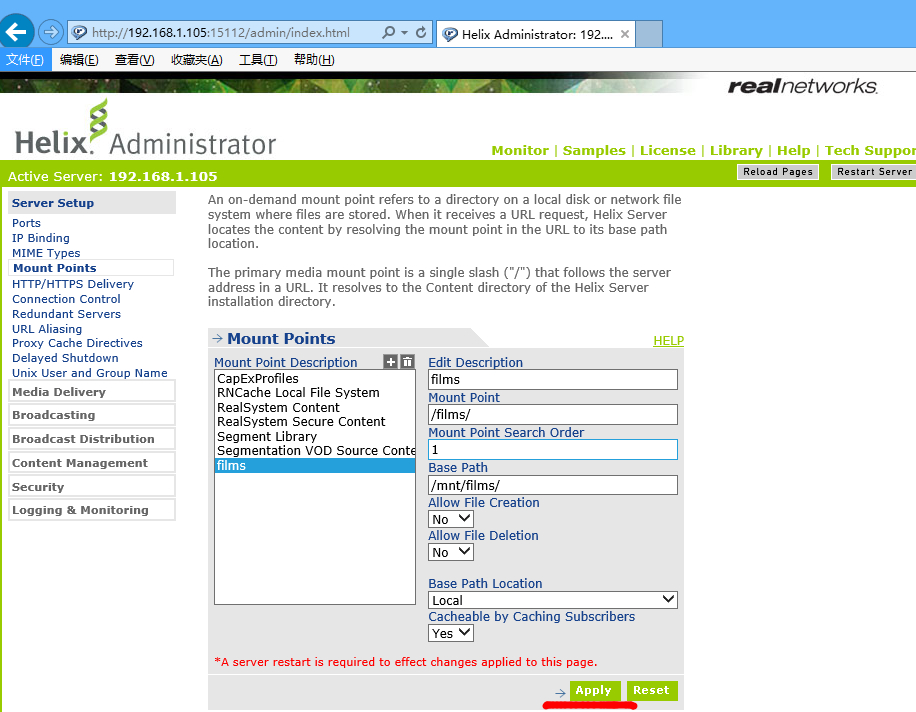

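First, place an RMVB video under /mnt/films. The path is your choice; it is simply where the videos are stored.

[root@localhost ~]# ll /mnt/films/2.rmvb

-rw-r--r-- 1 root root 526756127 Mar 11 18:12 /mnt/films/2.rmvb

After logging in, the interface looks like this: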

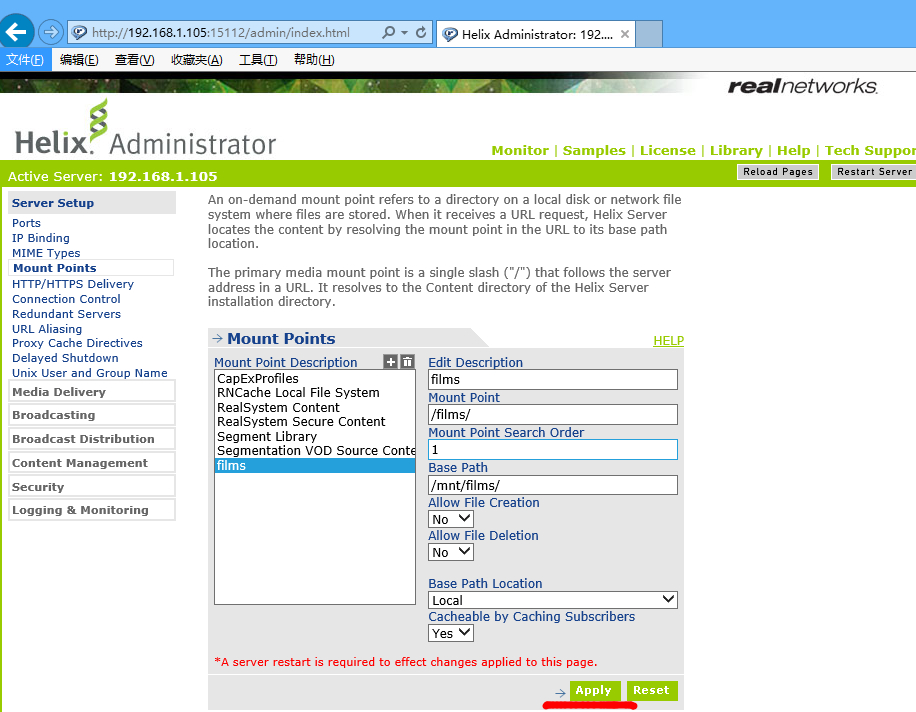

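Click Server Setup > Mount Points, then click the + sign to create a new "mount point".

Edit Description //description text

Mount Point //the directory that appears in the URL when browsing

Base Path //the physical location of the videos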
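
The relationship between Mount Point and Base Path can be pictured as a simple prefix substitution. The sketch below is a simplified model for illustration, not Helix's actual resolution logic; `url_path_to_file` is a hypothetical helper, and the mount point name /films/ is an assumption inferred from the test URL and the /mnt/films directory used in this post.

```shell
#!/usr/bin/env bash
# url_path_to_file MOUNT_POINT BASE_PATH URL_PATH
# Print the file that URL_PATH would map to, or fail if URL_PATH is not
# under MOUNT_POINT. Simplified model only, not Helix's real logic.
url_path_to_file() {
  local mount="${1%/}" base="${2%/}" url_path="$3"
  case "$url_path" in
    "$mount"/*) echo "${base}/${url_path#"$mount"/}" ;;
    *)          return 1 ;;
  esac
}

# Assumed mount point /films/ with base path /mnt/films:
url_path_to_file /films/ /mnt/films /films/2.rmvb
# prints /mnt/films/2.rmvb
```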

Click Apply. You are prompted to restart the service; click Reset Server to restart it. Back in the shell, you can see the server restarting.

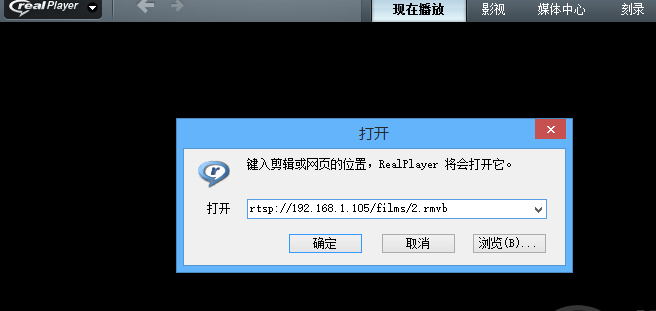

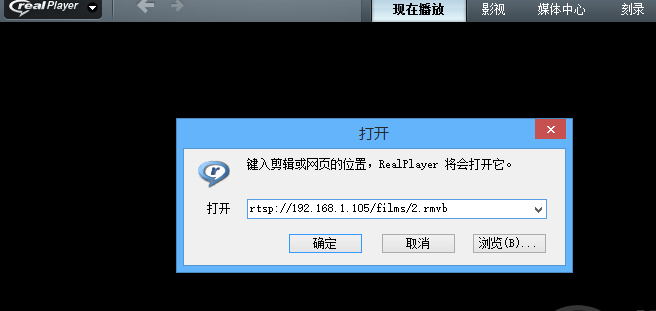

Test:

Download RealPlayer.

Open the address:

rtsp://192.168.1.105/films/2.rmvb

Formats I know to be supported:

3gp rmvb wmv

If iptables is enabled, you need to allow the ports shown in the admin panel.
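
As a rough sketch of what that could look like, the helper below only prints candidate rules so they can be reviewed before anything changes. `print_helix_iptables_rules` is a hypothetical name, and the port list is taken from the installer summary in this post; your admin ports will differ because they are randomly assigned. It covers TCP ports only.

```shell
#!/usr/bin/env bash
# print_helix_iptables_rules PORT...: print one iptables ACCEPT rule per
# TCP port. Dry run only: nothing is applied to the firewall.
print_helix_iptables_rules() {
  local port
  for port in "$@"; do
    echo "iptables -I INPUT -p tcp --dport ${port} -j ACCEPT"
  done
}

# Ports from the installer summary in this post; adjust to your own values.
print_helix_iptables_rules 554 1935 80 443 15112 25780

# After reviewing, run the printed commands as root, then on CentOS 6:
#   service iptables save
```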

That completes the basic setup.